China GEO: Unlocking the ‘Knowledge Source’ of China’s AI Large Models

- On July 18, 2025

- china geo, china large language models

China’s AI large model ecosystem is experiencing rapid development, presenting a diversified landscape dominated by “giants, rising stars, and industry deep-divers.” The leap in these models’ capabilities is supported by massive corpora, whose data source preferences and construction methods profoundly influence the models’ characteristics and application potential. For Western businesses, understanding the “knowledge sources” of these Chinese AI large models is crucial, not only for market competition but also for effectively leveraging AI technology to create new business opportunities.

This report deeply analyzes the composition and corpus sources of mainstream Chinese AI large models, revealing their unique advantages in Chinese comprehension, industry applications, and cultural adaptability. The report emphasizes that China has adopted a national strategic approach to corpus construction, aiming to build high-quality, authoritative, and publicly accessible Chinese corpora, which lays the foundation for the deep penetration of Chinese large models in the domestic market.

To help Western businesses navigate this trend, the report proposes three core strategies: First, clarifying the applicable scenarios and synergistic effects of Retrieval-Augmented Generation (RAG) and Large Language Model Fine-tuning to enhance the accuracy and timeliness of internal knowledge management and external interactions; Second, detailing the practical methods of Generative Search Engine Optimization (GEO), guiding businesses on how to organize and publish high-quality content to achieve higher visibility and recommendation in AI search results, thereby effectively reaching target users; Finally, through cross-industry cases, the report specifically demonstrates the application potential of AI large models in finance, healthcare, manufacturing, and retail, and offers advice to Western businesses on data security, privacy protection, intellectual property, and ethical norms, envisioning an AI-driven business future.

Introduction: Navigating China’s AI Frontier

China’s artificial intelligence large model ecosystem is evolving at an astonishing pace, characterized by a diverse landscape of tech giants, innovative startups, and vertical-specific models. This dynamic environment presents both immense opportunities and complex challenges for Western businesses seeking to enter or compete within the Chinese market. These models’ ability to process and generate human-like text, understand complex queries, and even perform multimodal tasks makes them powerful tools for various business applications, from marketing to customer service and internal knowledge management.

This report aims to demystify the “knowledge sources” underpinning mainstream Chinese AI large models. It will provide Western business operators and marketers with a clear understanding of how these models are trained, what data they prefer, and most importantly, offer concrete, actionable strategies—with a particular focus on Generative Search Engine Optimization (GEO)—to ensure their business information is effectively recognized, utilized, and presented by these AI systems.

I. Overview of China’s Mainstream AI Large Model Ecosystem

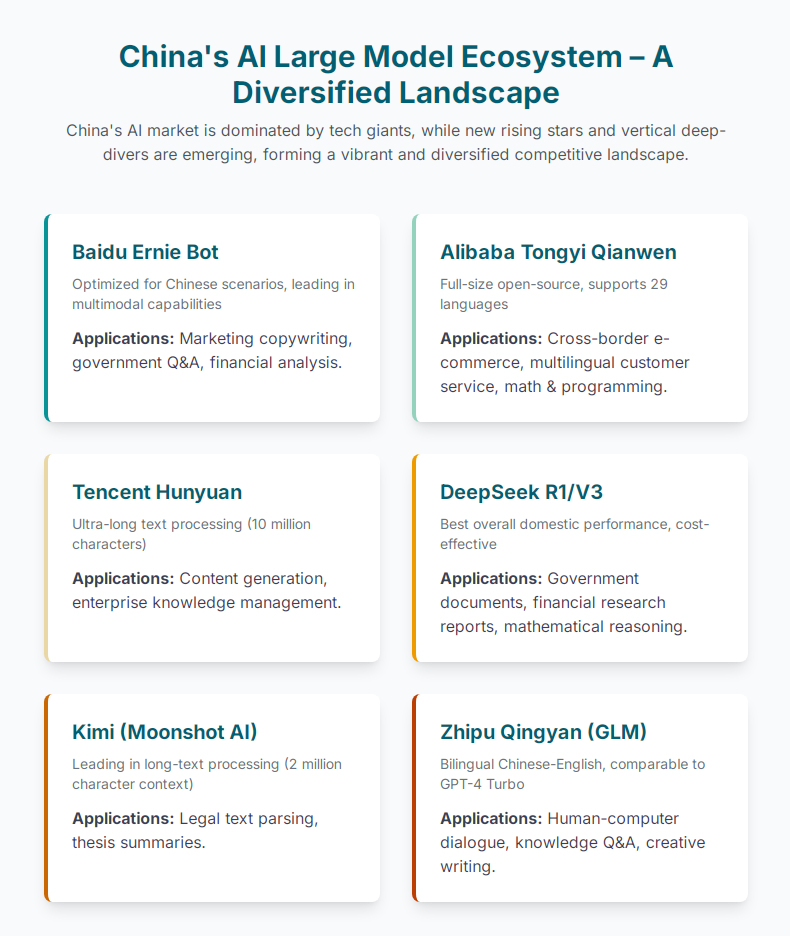

As of July 2025, China’s AI large language model ecosystem is developing rapidly, presenting a diversified pattern of “giant dominance + rising stars + industry deep cultivation”.

A. Giant Dominance: Market Landscape and Core Models

China’s AI large model landscape is primarily led by leading technology companies, whose models form the foundation for various applications across industries.

- Baidu Ernie Bot (Wenxin Yiyan): This model is optimized for Chinese scenarios, leading in multimodal capabilities (text, image, voice), and excelling in emotional recognition. Its main application scenarios include marketing copywriting, government Q&A, and financial analysis. Ernie Bot is based on Baidu’s ERNIE model series within the Wenxin NLP large model, possessing capabilities in language understanding, dialogue generation, and literary creation.

- Alibaba Tongyi Qianwen (Qwen): Developed by Alibaba, known for its full-size open-source models and support for 29 languages, excelling in mathematics and programming. It is widely used in cross-border e-commerce, multilingual customer service, and supply chain optimization. Tongyi Qianwen, as a causal language model, has undergone pre-training and instruction fine-tuning, and its multilingual support indicates a large and diverse corpus. In enterprise applications, it can utilize local knowledge bases for vectorization, retrieve relevant knowledge points, and combine them with queries to construct prompts for precise Q&A.

- ByteDance Doubao: ByteDance’s model, using a sparse MoE architecture for efficient training, boasts strong real-time voice interaction capabilities and rich multimodal generation. Primarily used for mobile assistants, short video scripts, and patient education.

- iFlytek Spark (Xunfei Xinghuo): Developed by iFlytek, it excels in multimodal fusion and is deeply applied in education and healthcare scenarios, supporting 72 dialect voice recognitions. Its applications include smart education, medical consultation, and industrial quality inspection. Spark’s training process involves uploading language text training datasets and test sets from industry fields for automated training and effect testing. This indicates its high reliance on specialized domain datasets.

- Tencent Hunyuan: Developed by Tencent, it specializes in ultra-long text processing (up to 10 million characters) and has strong Chinese creation and logical reasoning capabilities. Its core applications include content generation, social entertainment, and enterprise knowledge management. The model supports 15-18 language translations, implying a broad and multilingual corpus.

- Huawei Pangu: Optimized in synergy with Ascend chips, it offers high accuracy in industrial implementation and is China’s first hundred-billion-parameter Chinese NLP model. Primarily applied in industrial quality inspection, financial risk control, and scientific research simulation. Different versions of the Pangu-NLP model (N1-N4) are specialized for text understanding, general tasks, industry applications, and logical reasoning, indicating that it incorporates a large amount of industry-specific data on top of general corpora.

- 360 Zhinao (360 Brain): Developed by 360 Group, it excels in Chinese multidisciplinary balance, especially in security and enterprise knowledge management. Applied in security monitoring, enterprise knowledge bases, and industry reports.

B. Rising Stars and Vertical Deep Cultivation

In addition to tech giants, China’s AI landscape has seen the emergence of many fast-rising new forces and models focused on specific domains, continuously pushing technological boundaries and meeting segmented market demands.

- DeepSeek R1 / V3: Positioned as China’s best overall performing model, with a 3x increase in inference speed and training costs only 1/27 of GPT-4. Its applications include government documents, financial research reports, and mathematical reasoning. DeepSeek employs a hybrid attention mechanism, showing significant advantages in Chinese context understanding, and its training corpus includes a large number of classic Chinese literary works (such as “The Three-Body Problem” and 41 other titles), giving it an inherent advantage in cultural heritage. Additionally, it was trained on 45TB of data, nearly a trillion words, and billions of lines of source code, demonstrating its massive scale.

- Kimi (Moonshot AI): Leads in long-text processing (2 million character context) and excels in legal and scientific research analysis; Kimi K2 supports Agent tasks, outperforming GPT-4.1. Primarily used for legal text parsing, thesis summaries, and travel planning.

- Zhipu Qingyan (GLM): Developed by Zhipu AI, it is a bilingual Chinese-English hundred-billion-parameter model whose language and knowledge capabilities are comparable to GPT-4 Turbo. Applied in human-computer dialogue, knowledge Q&A, and creative writing. Zhipu Qingyan is developed based on Zhipu AI’s foundational large model, pre-trained with trillions of characters of text and code, combined with supervised fine-tuning techniques. It also focuses on “advanced retrieval” from websites like CNKI, Zhihu, and Xiaohongshu, indicating that its corpus prioritizes high-quality, diverse, and potentially user-generated content from popular Chinese platforms.

- MiniMax (ABAB): Features a multimodal foundational architecture (text-to-speech/vision), and its general large model ABAB supports enterprise customization. Applied in game NPCs, virtual humans, and intelligent customer service.

- Baichuan Intelligent (Baichuan): Offers open-source commercially available models (Baichuan-7B/13B), with cumulative downloads exceeding 6 million times. Primarily used for lightweight deployment and NLP solutions for small and medium-sized enterprises. Baichuan Intelligent has open-sourced intermediate weights for the entire training process from 200B to 2640B data, demonstrating its commitment to transparency and community research. Its strong capabilities in role-playing and customized character responses indicate that its corpus is rich in dialogue and colloquial data.

- 01.AI (Yi): Developed by Kai-Fu Lee’s team, it excels in multilingual optimization and has significant influence in the open-source ecosystem. Applied in cross-language research and edge device deployment. Its API structure for dialogue messages also suggests its training focuses on dialogue data.

- Vertical Industry and University Models: These models focus on specific domains, leveraging deep industry knowledge. For example, Shanghai AI Lab’s InternLM (Shusheng Puyu) focuses on academic research, Tsinghua University’s ChatGLM-6B is a lightweight open-source model, CASIA’s Zidong Taichu is a multimodal general platform, Ronhe Cloud’s Chitu large model specializes in enterprise customer service and marketing, China Mobile’s Jiutian Customer Service large model serves telecommunications, China Unicom’s Yuanjing large model focuses on AI terminal integration, and Inspur Group’s Inspur Cloud HaiRuo large model deeply cultivates the medical and water conservancy industries.

Table 1: Overview of Mainstream Chinese AI Large Models

| Model Name | Developer | Core Features | Main Application Scenarios |

|---|---|---|---|

| Baidu Ernie Bot | Baidu | Optimized for Chinese scenarios, leading in multimodal, excelling in emotional recognition | Marketing copywriting, government Q&A, financial analysis |

| Tongyi Qianwen | Alibaba | Full-size open-source, multilingual support (29 languages), top in mathematics and programming | Cross-border e-commerce, multilingual customer service, supply chain optimization |

| Doubao | ByteDance | Sparse MoE architecture, strong real-time voice interaction, rich multimodal generation | Mobile assistant, short video scripts, patient education |

| iFlytek Spark | iFlytek | Multimodal fusion, deep application in education and healthcare scenarios, supports 72 dialects | Smart education, medical consultation, industrial quality inspection |

| Tencent Hunyuan | Tencent | Ultra-long text processing (10 million characters), strong Chinese creation and logical reasoning | Content generation, social entertainment, enterprise knowledge management |

| Huawei Pangu | Huawei | Optimized in synergy with Ascend chips, high accuracy in industrial implementation, first hundred-billion-parameter Chinese NLP model | Industrial quality inspection, financial risk control, scientific research simulation |

| 360 Zhinao | 360 Group | Strong Chinese multidisciplinary balance, excelling in security and enterprise knowledge management | Security monitoring, enterprise knowledge base, industry reports |

| DeepSeek R1/V3 | DeepSeek | Best overall domestic performance, fast inference speed, low training cost | Government documents, financial research reports, mathematical reasoning |

| Kimi | Moonshot AI | Leading in long-text processing (2 million character context), strong in legal and scientific research analysis | Legal text parsing, thesis summaries, travel planning |

| Zhipu Qingyan | Zhipu AI | Bilingual Chinese-English hundred-billion-parameter model, language and knowledge capabilities comparable to GPT-4 Turbo | Human-computer dialogue, knowledge Q&A, creative writing |

| MiniMax | MiniMax | Multimodal foundational architecture, general large model supports enterprise customization | Game NPCs, virtual humans, intelligent customer service |

| Baichuan Intelligent | Baichuan Intelligent | Open-source commercially available, large download volume | Lightweight deployment, NLP solutions for small and medium-sized enterprises |

| 01.AI | Kai-Fu Lee’s Team | Excelling in multilingual optimization, significant influence in open-source ecosystem | Cross-language research, edge device deployment |

| InternLM | Shanghai AI Lab | Academic research | Chinese-English reading comprehension, complex reasoning tasks |

| ChatGLM-6B | Tsinghua University | Lightweight open-source | Local deployment on consumer graphics cards, education/research |

| Zidong Taichu | CASIA | Multimodal general platform | Industrial vision, cross-modal analysis |

| Chitu Large Model | Ronhe Cloud | Enterprise customer service and marketing | Intelligent conversation, script recommendation, knowledge base |

| Jiutian Customer Service Large Model | China Mobile | Telecommunications services | 24-hour intelligent butler, multi-turn interaction |

| Yuanjing Large Model | China Unicom | AI terminal integration | Smart hardware (e.g., “Tongtong AI” terminal) |

| Inspur Cloud HaiRuo Large Model | Inspur Group | Medical/Water Conservancy Industry | Electronic medical record generation, water conservancy scheduling optimization |

II. In-depth Analysis of Mainstream AI Large Model Corpus Sources

A. Common Characteristics of Large Model Training Corpora

The leap in AI large models’ capabilities fundamentally benefits from the scale of their training data, which enables “emergent abilities” – capabilities that do not exist in smaller models but appear in larger ones. Pre-training corpora are typically massive and unannotated, containing rich linguistic elements and complex structures. This unannotated data provides models with real, natural language usage scenarios, allowing them to learn the essential characteristics and underlying rules of language . Large-scale datasets can offer a wealth of linguistic phenomena and diverse contextual scenarios, enabling models to encounter various language structures, vocabulary usages, and grammatical rules during training.

Web data is the most common and widely used data type in pre-training corpora, primarily sourced from publicly available web pages regularly crawled and archived by non-profit organizations like Common Crawl. Common Crawl, as an open web crawl data repository, provides valuable resources for large-scale web content analysis, supporting activities such as search engine optimization, language model training, and social data research. However, Common Crawl’s raw data cannot be directly used for language model training because it aims to crawl the largest possible subset of all web pages without filtering content. Raw data contains a large amount of content unsuitable for training corpora, such as irrelevant information like advertisements and navigation bars; harmful content like pornography and violence; worthless content like machine-generated spam and advertisements; and sensitive information involving personal privacy. Therefore, many pre-training corpora are constructed by strictly filtering and de-duplicating Common Crawl data, such as the RefinedWeb dataset and the RedPajama-1T dataset. This strict requirement for data quality and cleanliness is crucial for effective model learning and avoiding “hallucinations.”

Book data also provides models with broad and in-depth content, often with a relatively complete classification system, which helps models learn a wide range of knowledge and structured information. When processing book data, it is usually necessary to remove metadata such as covers, tables of contents, and copyright pages, and filter out advertising content to ensure data balance. In addition, code datasets, such as The Stack dataset containing 385 programming languages and over 6TB of data, are essential for models to learn programming syntax and style, thereby enhancing their coding and logical reasoning capabilities.

B. Characteristics and Challenges of Chinese Large Model Corpus Construction

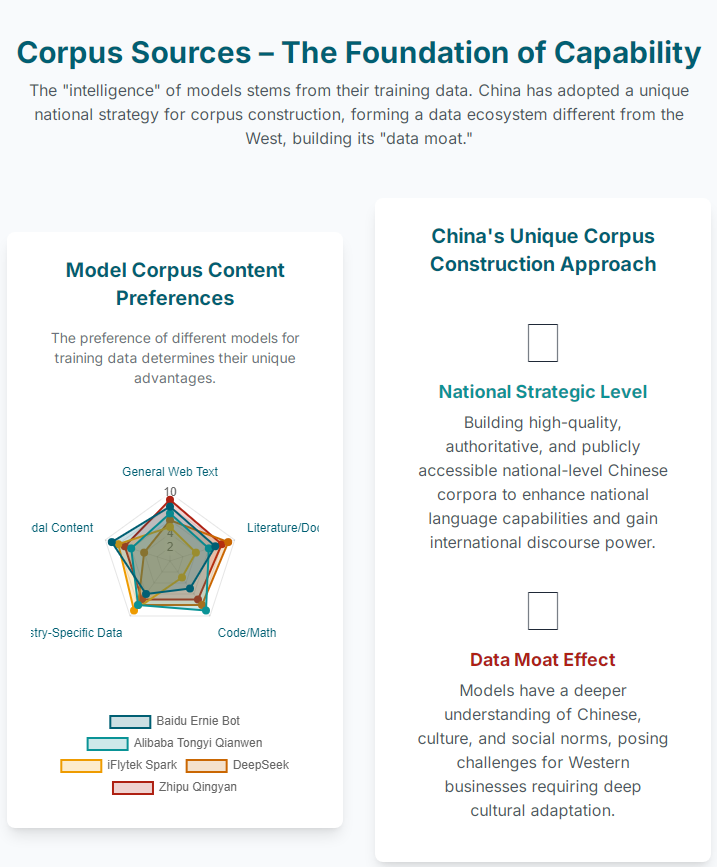

China exhibits unique characteristics and challenges in AI corpus construction, closely related to national strategy and cultural background.

National Strategy and Policy Guidance

China views AI corpus construction as a national strategy, aiming to gain the initiative, guidance, and discourse power in international public opinion. This includes relevant national agencies taking the lead in formulating guiding opinions, establishing a unified data standard system, review and evaluation system, and basic verification mechanism. By organically integrating existing domestic corpora, multi-source data integration is achieved, addressing data silo issues, and promoting open sharing. The Ministry of Education also emphasizes the implementation of national key corpus construction plans to build large-scale Chinese corpora to enhance national language capabilities.

This top-down, coordinated effort differs from the Western approach to large model development, which primarily relies on privately curated datasets or extensive web crawling. It is likely to lead to the rapid accumulation of high-quality Chinese language data that is highly aligned with Chinese culture and political stances. This means that Chinese large models may possess a deeper, more nuanced understanding of Chinese language, culture, and specific social norms than Western models, especially when dealing with subtle local contexts or specific ideological sensitivities. For Western businesses, this implies that interacting with Chinese AI platforms is not just a matter of technical integration but also of understanding and adapting to a data ecosystem shaped by national strategic goals. Content for Chinese AI platforms may require deep cultural and contextual adaptation, not just simple translation. This also suggests that Chinese models may have a “data moat” in their domestic market, making it difficult for Western models to compete in China solely on linguistic and cultural nuances.

Construction of High-Quality Chinese Datasets

China places great emphasis on the construction of high-quality Chinese datasets, which is crucial for training Chinese AI models. This includes efforts to digitize and share cultural heritage like oracle bone inscriptions, as well as multilingual digital dissemination plans for excellent Chinese cultural courses.

Corpus Content Preferences and Model Capability Mapping

Different Chinese large models exhibit varying preferences in corpus content, and these preferences directly shape their core capabilities and application scenarios:

- Baidu Ernie Bot: Based on Baidu’s ERNIE model series, focusing on language understanding, generation, dialogue, and literary creation. Its optimization for Chinese scenarios and multimodal capabilities indicates that its corpus includes general web text, literary works, and multimodal data.

- Tongyi Qianwen: As a causal language model, its multilingual support (29 languages) means its corpus is large, diverse, and multilingual. Byte-level Byte Pair Encoding tokenization technology demonstrates its robustness in language processing. In enterprise applications, it utilizes local knowledge bases for vectorization and storage, extracting relevant knowledge points and constructing prompts for Q&A, indicating its preference for structured and domain-specific data for fine-tuning.

- iFlytek Spark: Emphasizes multimodal interaction (image understanding, visual Q&A, audio/video generation) and deep integration in education and healthcare. Its training process involves uploading language text datasets from industry fields, indicating its high reliance on specialized, domain-specific datasets to support its vertical applications.

- Tencent Hunyuan: Supports 15-18 language translations, implying a broad and multilingual corpus. Its ultra-long text processing capability indicates that its corpus is rich in long documents, reports, and literary works.

- Huawei Pangu: Different versions of the Pangu-NLP model (N1-N4) are specialized for text understanding, general tasks, industry applications (through additional industry data pre-training), and logical reasoning, respectively. This indicates that for enterprise-level versions, a large amount of industry-specific datasets are added on top of general foundational corpora.

- Zhipu Qingyan: Developed based on Zhipu AI’s foundational large model, pre-trained with trillions of characters of text and code, combined with supervised fine-tuning techniques. It excels in general Q&A, multi-turn dialogue, creative writing, code generation, virtual dialogue, AI painting, and document and image interpretation. Its emphasis on “advanced retrieval” from academic (CNKI) and social (Zhihu, Xiaohongshu) platforms indicates that its corpus prioritizes high-quality, diverse, and potentially user-generated content from popular Chinese platforms.

- DeepSeek: Employs a hybrid attention mechanism, showing significant advantages in Chinese context understanding, especially in capturing subject-predicate structures. Its training corpus includes a large number of classic Chinese literary works (such as “The Three-Body Problem” and 41 other titles), giving it an inherent advantage in cultural heritage. Its training data volume is massive, including 45TB of data, nearly a trillion words, and billions of lines of source code.

- Baichuan Intelligent: Open-sourced intermediate weights for the entire training process from 200B to 2640B data. Its strong capabilities in role-playing and customized character responses indicate that its corpus is rich in dialogue and colloquial data.

- 01.AI: Excels in multilingual optimization, implying a diverse and multilingual corpus. Its API structure for dialogue messages also suggests its training focuses on dialogue data.

This specialization in domain-specific data is a significant trend in China’s large model ecosystem, moving beyond general models towards highly specialized, industry-specific AI. This specialization is directly achieved by feeding models high-quality, targeted domain data (e.g., medical literature, industrial reports, financial data). This allows models to achieve higher accuracy, relevance, and effectively reduce “hallucinations” within their specific domains, such as iFlytek’s medical applications or Huawei Pangu’s industrial precision. This shift from “pilot verification” to “value creation” clearly indicates that AI technology is deeply integrating into the core production processes of traditional industries, especially in government affairs, central state-owned enterprises, finance, healthcare, and manufacturing. For Western businesses, this trend means that general large models may not be sufficient to meet their deep integration needs in China. Businesses may need to: a) utilize these specialized Chinese models for specific tasks; b) invest resources to fine-tune general models with their proprietary domain data; or c) strategically publish highly specialized content on platforms preferred by these models to influence their knowledge bases, thereby enhancing the effectiveness of Generative Search Engine Optimization (GEO).

Table 2: Corpus Source Preferences and Capability Focus of Mainstream Chinese AI Large Models

| Model Name | Developer | Inferred Corpus Source Preference | Corresponding Capability Focus |

|---|---|---|---|

| Baidu Ernie Bot | Baidu | General web text, literary works, multimodal data | Chinese scenario optimization, multimodal, emotional recognition, literary creation |

| Tongyi Qianwen | Alibaba | Large-scale multilingual text, programming code, structured domain knowledge | Multilingual support, mathematics and programming, cross-border e-commerce, supply chain optimization |

| Doubao | ByteDance | Mobile dialogue, short video scripts, multimodal content | Real-time voice interaction, multimodal generation, mobile assistant |

| iFlytek Spark | iFlytek | Industry-specific language text (education, medical), multimodal data, dialect voice | Multimodal fusion, education and medical scenarios, dialect voice recognition |

| Tencent Hunyuan | Tencent | Ultra-long text, multilingual text | Ultra-long text processing, Chinese creation, logical reasoning, multilingual translation |

| Huawei Pangu | Huawei | Industrial data, financial data, scientific research data | High accuracy in industrial implementation, industrial quality inspection, financial risk control, scientific research simulation |

| 360 Zhinao | 360 Group | Chinese multidisciplinary text, security and enterprise knowledge management data | Chinese multidisciplinary balance, security monitoring, enterprise knowledge base |

| DeepSeek R1/V3 | DeepSeek | Classic Chinese literary works, government documents, financial research reports, mathematical code | Chinese context understanding, subject-predicate structure capture, mathematical reasoning, financial analysis |

| Kimi | Moonshot AI | Long text (legal, scientific papers) | Long text processing, legal and scientific research analysis |

| Zhipu Qingyan | Zhipu AI | Trillions of characters of text and code, CNKI/Zhihu/Xiaohongshu content | General Q&A, multi-turn dialogue, creative writing, code generation |

| MiniMax | MiniMax | Multimodal (text-to-speech/vision), dialogue data | Game NPCs, virtual humans, intelligent customer service |

| Baichuan Intelligent | Baichuan Intelligent | Large-scale general text, dialogue data, role-playing corpus | Lightweight deployment, multi-turn dialogue, role-playing |

| 01.AI | Kai-Fu Lee’s Team | Multilingual text | Cross-language research, edge device deployment |

| Vertical Industry/University Models | Various Institutions | Specific domains (academic, education, medical, telecommunications, industrial) | Domain-specific knowledge, specific scenario applications |

III. How Western Businesses Can Leverage AI Large Models to Create Opportunities

Western businesses need to adopt multi-level strategies when utilizing Chinese AI large models, from internal knowledge management to external market visibility, to comprehensively enhance AI application capabilities.

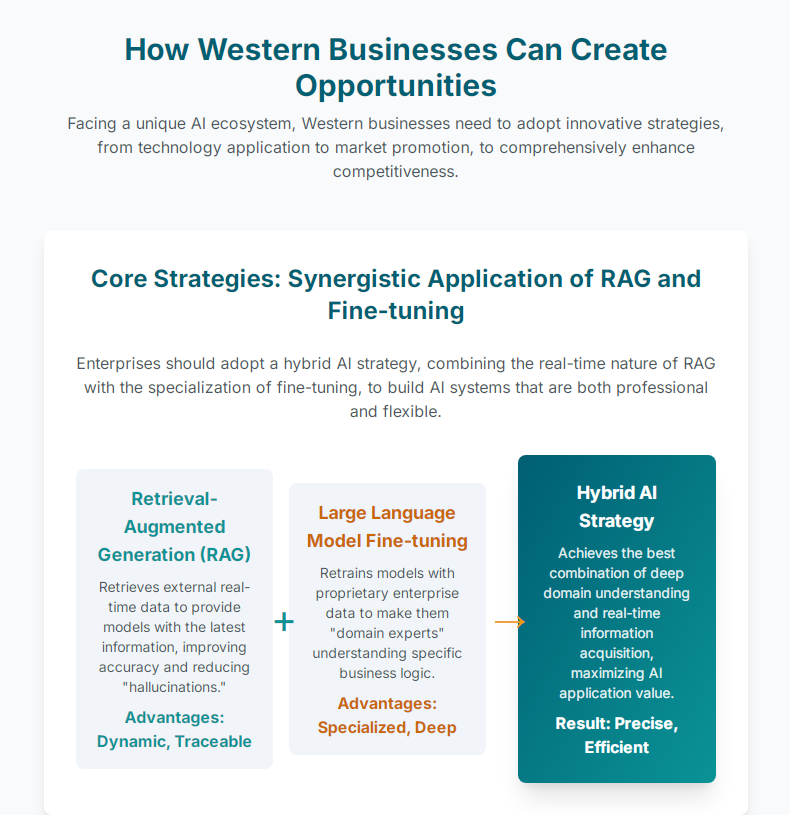

A. Core Strategies: The Choice and Application of RAG and Fine-tuning

When deploying AI large models, enterprises face the choice and combination of two core strategies: Retrieval-Augmented Generation (RAG) and Large Language Model Fine-tuning (LLM Fine-tuning).

1. Retrieval-Augmented Generation (RAG): Enhancing Information Accuracy and Timeliness

RAG is an advanced AI architecture that combines the strengths of traditional information retrieval systems (like search and databases) with the generative capabilities of Large Language Models (LLMs). When a user queries, the RAG system retrieves relevant information from external data sources, combines this information with the original query to form an augmented prompt, and then processes this augmented prompt through the LLM to generate more precise and timely responses. This method significantly improves the accuracy and relevance of LLM outputs, especially for knowledge-intensive tasks.

Key advantages for enterprises applying RAG include:

- Improved Accuracy and Reliability: RAG provides LLMs with external validation data, reducing “hallucination” phenomena and improving answer precision, especially for domain-specific questions.

- Dynamic Knowledge Base Updates: Unlike LLMs with fixed training knowledge, RAG allows models to retrieve the latest information in real-time, ensuring responses are up-to-date, which is crucial for rapidly changing industries or real-time information needs.

- Reduced Model Fine-tuning Costs: RAG enables models to understand specialized knowledge by retrieving external data, often without the need for time-consuming and costly model fine-tuning.

- Enhanced Traceability and Transparency: RAG cites specific sources when generating responses, making answers more traceable and enhancing model credibility.

- Data Sovereignty and Privacy: Sensitive data can be kept local while providing information to the LLM, avoiding data leakage risks, and information retrieval can be restricted based on authorization levels.

RAG has wide application scenarios in knowledge-intensive tasks, including:

- Customer Service: Programming chatbots with a deep understanding of specific documents to answer customer inquiries, thereby shortening problem resolution time and improving efficiency.

- Q&A Systems: Significantly improving the accuracy of Q&A systems, for example, in medical institutions, where systems can retrieve professional literature based on patient questions and generate diagnostic suggestions.

- E-commerce: Recommending personalized products based on consumer shopping history and preferences.

- Healthcare: Integrating external medical data sources to assist healthcare professionals in quickly retrieving relevant case data or academic research during diagnosis, providing precise treatment suggestions.

- Legal Research: Quickly retrieving relevant legal provisions or cases when handling complex legal matters, helping legal teams improve research efficiency.

- Content Creation: Helping journalists or educational institutions retrieve the latest data or create customized teaching materials.

- Generating Insights: Querying existing documents such as annual reports, marketing documents, or social media comments to find answers that help better understand resources.

- Enterprise Knowledge Base: Successfully building enterprise-exclusive Q&A knowledge bases using tools like Hologres+PAI+DeepSeek, providing concise and accurate Q&A results, which can be embedded in intelligent Q&A customer service, AI shopping guides, and other business scenarios.

2. Large Language Model Fine-tuning (LLM Fine-tuning): Achieving Domain Specialization

While general AI models have broad capabilities, they often struggle to meet specific business needs because they lack an understanding of proprietary processes, industry jargon, or unique quality control procedures. For example, a pharmaceutical company needs AI that understands drug discovery terminology, while a law firm needs models that understand complex contractual language. Fine-tuning allows these pre-trained models to be adjusted to perform specific tasks more effectively or serve specific domains, transforming them from generalists into true business experts. This process optimizes model performance on target tasks by adjusting selected neural network weights without training from scratch, thereby avoiding high costs. Fine-tuning can customize model behavior to generate more accurate and contextually relevant outputs for tasks such as sentiment analysis, chatbot development, or Q&A.

Enterprise data preparation and fine-tuning process:

Effective fine-tuning relies on high-quality, targeted datasets. Data typically needs to be in formats like JSON/JSONL, supporting the implementation of Q&A or instruction fine-tuning. The fine-tuning process includes:

- Project Configuration: Setting access control, choosing private deployment to protect sensitive data.

- Base Model Selection: Considering the complexity of the target task, computational resources, context window requirements, and model architecture.

- Dataset Preparation: Collecting domain-specific examples, preprocessing (validation, truncation, tokenization, train/validation split). Research shows that doubling the dataset size linearly improves model quality.

- Hyperparameter Configuration: Adjusting parameters such as LoRA Alpha, LoRA Dropout, learning rate, and Epoch Count. Parameter-Efficient Fine-tuning (PEFT) combined with the LoRA method is often used to optimize computational requirements.

- Training Execution and Model Deployment: Loading base model weights, integrating LoRA adapters, executing training loops, tracking evaluation metrics, and optimizing inference.

Strategic Synergy of RAG and Fine-tuning

RAG and fine-tuning are not mutually exclusive options but complementary tools. RAG offers the advantages of dynamic updates and cost savings because it avoids full model retraining, while fine-tuning provides deeper domain expertise and improved performance for highly specific tasks, especially in scenarios where general models struggle.

- RAG for Timeliness and Breadth: It is ideal for scenarios requiring real-time information and access to broad, frequently updated external knowledge bases, without altering the core model.

- Fine-tuning for Depth and Specificity: It is best suited for embedding proprietary knowledge, specific tone of voice, output formats, or complex industry logic directly into the model’s parameters, making it a true “expert”.

Enterprises can combine RAG and fine-tuning to build more powerful AI systems. For example, a model can be fine-tuned to gain deep understanding and specific behaviors on core proprietary data, and then RAG can be used to augment its responses with the latest external or frequently updated internal documents. This offers the best of both worlds: deep domain understanding combined with real-time accuracy and traceability. This hybrid AI strategy is an inevitable trend for the widespread application of enterprise AI. Businesses need to identify which parts of their knowledge base require deeply embedded expertise (via fine-tuning) and which require dynamic, up-to-date retrieval (via RAG). This also implies the need for flexible AI infrastructure capable of supporting both methods simultaneously, possibly through platforms offering integrated RAG and fine-tuning services.

B. Enhancing AI Search Visibility: Generative Search Engine Optimization (GEO) Practices

As users turn to AI large models for intelligent Q&A, advertising agencies and businesses are also eyeing this new frontier of traffic. Generative Search Engine Optimization (GEO) is a content optimization service for AI conversational platforms, whose core purpose is to systematically optimize content visibility, ranking, and even traffic acquisition capabilities in AI conversations. This differs from traditional SEO (Search Engine Optimization), where the primary concern of GEO is no longer “can content rank on the first page of search engines,” but “can content become part of AI answers”. This marks a paradigm shift from “getting ranked” to “being cited and recommended by AI”.

1. GEO Core Concepts and AI Preferences

AI models, especially in generative search, prefer high-quality, authoritative, and well-structured content. They prioritize content that provides specific data, case studies, clear Q&A formats, and credible sources. For example, Google’s AI Overviews aim to provide clearer, more concise summaries, which will significantly impact traditional SEO strategies.

In AI search, the E-E-A-T principle (Experience, Expertise, Authoritativeness, Trustworthiness) is crucial. Google’s algorithms, including those used for AI-generated content, are designed to reward original and high-quality content that adheres to the E-E-A-T principle. This means that content must demonstrate real experience, deep expertise, come from authoritative sources, and be trustworthy to gain AI’s favor.

Current AI search faces a significant “trust deficit,” especially concerning the accuracy and commercial neutrality of commercial content. AI models inherently produce “hallucinations”—information that seems plausible but is actually incorrect. This trust issue is further exacerbated when commercial interests intervene without transparency. Users may not know if content has been paid for and optimized by third-party advertising agencies, making the ethical dimension more complex. This directly impacts user trust in AI-generated responses.

For Western businesses, simply “feeding” information to AI is not enough; businesses must prioritize providing verifiable, transparent, and genuinely useful content. GEO strategies must go beyond mere visibility and actively build trust. This means a stronger emphasis on the E-E-A-T principle, providing clear citations, and potentially advocating for industry standards around disclosure of AI-generated commercial content. The goal is not just to appear, but to appear credibly. This also foreshadows potential future regulations for AI-generated commercial content, similar to traditional advertising regulations.

2. Strategies for Organizing and Publishing High-Quality Enterprise Content

- Understanding User Intent and Long-Tail Keyword Optimization: In the AI era, user search behavior is shifting from short keywords to full sentences and complex queries. Businesses should use keyword analysis tools to identify these “contextual keywords” and “specification recommendation keywords” (long-tail keywords). Content should proactively answer these questions, provide complete solutions tailored to specific user situations, and include detailed comparisons or product features. It is strongly recommended to use real consumer questions to create professional FAQ sections.

- Building Topical Authority and Content Clusters: Businesses should build “topical authority” by creating content clusters around core themes, rather than optimizing for single points. This includes:

– Establishing a Pillar Page: An authoritative guide on the main topic.

– Establishing Cluster Pages: Multiple articles delving into various sub-topics.

– Strategic Internal Linking: Linking all sub-topic pages back to the pillar page, forming a tightly knit content network. This signals to AI that the business is an expert in that domain.

– Regular Content Updates: Search engines favor fresh, relevant content. Regularly updating outdated information ensures accuracy and relevance. - Multimedia Content Optimization and Structured Data Application: AI models are becoming increasingly multimodal. Businesses should:

– Include high-quality, optimized images and videos.

– Provide detailed alt text and descriptions for multimedia content.

– Use descriptive filenames, titles, and alt text for images.

– Consider developing interactive tools (e.g., calculators, assessment forms).

– Structured Data Markup: Implement JSON-LD structured data markup for key elements (e.g., product specifications, prices, addresses) to help AI efficiently understand and extract information.

3. Technical Optimization Practices: Ensuring AI Discoverability

- Native HTML Output and SSR/Pre-rendering: Critical SEO elements (e.g., title tags, meta name=”description”, structured data, main content) should be directly output in the raw HTML source code, rather than injected via JavaScript. Implementing Server-Side Rendering (SSR) or pre-rendering techniques (e.g., Next.js ISR, Nuxt SSR) allows the server to generate static HTML during initial page load, which helps LLM search platforms retrieve data more effectively.

- Sitemap Management and Index Status Checks: When there are new pages or significant updates, sitemaps should be regularly updated and actively submitted to Google and LLM search platforms that support API submission. Use tools like Google Search Console’s “URL Inspection Tool” to monitor index status and confirm whether content has been cited by AI.

- Controlling Content Dynamism and Rendering Costs: Pages heavily relying on JavaScript are less friendly to search bots, with an average crawl speed more than 9 times slower than static pages. It is recommended to staticize non-essential information as much as possible to improve overall rendering efficiency and ensure AI crawlers can easily discover content.

Beyond keywords, content granularity, structure, and semantic richness, especially achieved through structured data, are becoming primary metrics for AI search visibility (GEO). Generative AI models excel at extracting specific answers from well-organized text. Fragmented, unstructured, or overly dynamic content is difficult for AI to parse and accurately incorporate into its answers. Structured data (JSON-LD) provides clear semantic cues, making it easier for AI to understand the context and relationships of information, thereby increasing the likelihood of being cited and accurately synthesized. This directly improves the “machine readability” of content. For Western businesses, this means fundamentally rethinking their content creation and publishing processes. It’s not just about writing for human readers or traditional search algorithms; it’s about structuring content for machine readability and extractability. This implies investing in content architecture, semantic markup, and potentially adopting new content management systems to facilitate this granular, structured approach. It also suggests that “technical SEO” in the AI era is more about content engineering than traditional webmaster tasks.

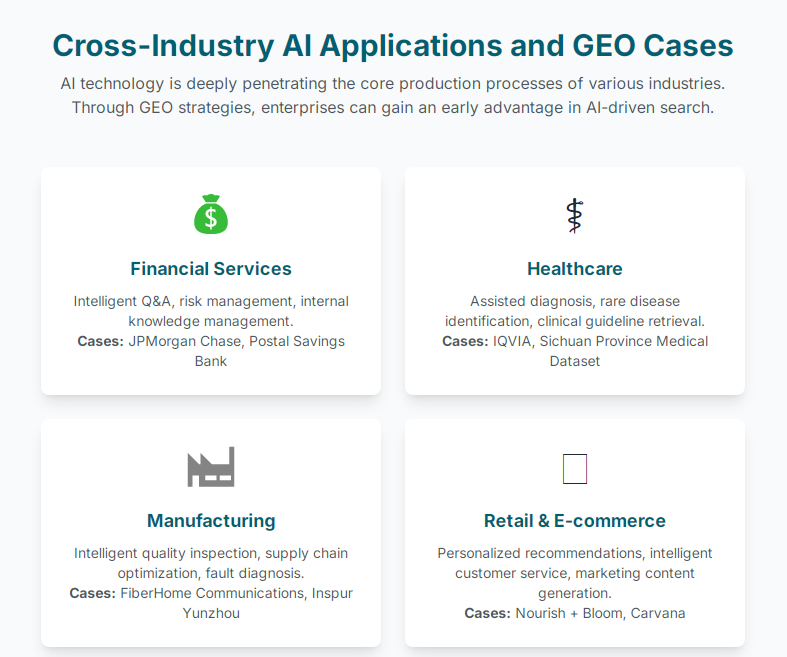

C. Cross-Industry AI Applications and GEO Case Studies

AI large models are demonstrating immense application potential across various industries and providing new avenues for businesses to enhance visibility through GEO.

Financial Services Industry

- AI Application: AI has been embedded in various financial service processes for data analysis, operational efficiency improvement, customer service optimization, and marketing. For example, automated loan/insurance approval, fraud prevention, risk management, algorithmic trading, and compliance matters. AI inference can help banks detect fraud and unsafe transactions in real-time and protect accounts through voice authentication.

- Knowledge Base/RAG: Financial institutions are using LLM-based intelligent assistants for internal knowledge management, such as JPMorgan Chase’s LLM Suite helping employees write emails and reports. Postal Savings Bank of China’s intelligent Q&A system, a joint-stock bank’s intelligent customer service based on Tencent Cloud TI-OCR large model, and another bank’s AI knowledge middle platform built in collaboration with Zhipu AI GLM-130B, applied in investment banking, WeChat marketing, and compliance Q&A. Bank of Beijing and Bank of Shanghai also utilize AI to build intelligent office assistants.

- GEO Strategy: Financial institutions can optimize whitepapers and industry reports through structured data and E-E-A-T principles for AI to recognize and use for financial analysis and investment insights. For example, HithinkGPT, Tonghuashun’s financial large model, is optimized for financial Q&A, investment consulting, and analysis.

Healthcare Industry

- AI Application: AI assists in patient care, disease trend analysis, diagnosis, and drug discovery. For example, AI-assisted diagnosis can identify abnormalities in brain scans or extra heartbeats, early detection of rare diseases, and enhanced clinical research.

- Knowledge Base/RAG: AI models like CrossNN analyze epigenetic features of tumor cells for non-invasive cancer diagnosis. AI agents can provide daily clinical decision support and help doctors access the latest treatment guidelines, significantly reducing “AI hallucinations”. IQVIA builds an automated medical AI platform based on longitudinal patient data to identify patients in real-time and predict incidence risk and disease progression. Sichuan Province has built datasets for 120 advantageous diseases and 1000 sub-types, including clinical cases from over 100 experienced traditional Chinese medicine practitioners, forming a standardized data resource system covering the entire diagnosis and treatment chain to support professional AI model training.

- GEO Strategy: Healthcare providers can publish clinical guidelines, research papers, and patient education materials on authoritative medical platforms, adopting clear structures and expert authorship to ensure AI models can retrieve and cite this content for diagnostic suggestions or treatment recommendations.

Manufacturing Industry

- AI Application: AI is transforming manufacturing, from factory floors to supply chain management, improving efficiency and reducing human error. This includes industrial robots performing repetitive or dangerous tasks, AI optimizing supply chain and inventory management, and industrial analytics identifying bottlenecks.

- Knowledge Base/RAG: AI-based quality inspection can significantly improve efficiency and accuracy. For example, FiberHome Communications applies a visual inspection platform for AI quality inspection in PCBA board manufacturing, significantly reducing the outflow rate of low-level defects and saving about 1 million yuan in labor costs annually. Inspur Yunzhou’s Zhiyie large model, based on 40 years of operational experience from 20 experienced masters, increased the qualified rate of carbon black products from 82% to 94%.

- GEO Strategy: Manufacturing enterprises can publish technical specifications, maintenance manuals, and operational best practices on industry portals or company official websites. Optimizing these documents with structured data and clear, concise language enables AI models to assist with fault diagnosis, predictive maintenance, and operational guidance.

Retail and E-commerce

- AI Application: AI enhances consumer experience, reduces losses, and increases profits through better inventory management, personalized recommendations, and chatbots. For example, Nourish + Bloom, the first AI-powered autonomous grocery store in the Southern US, provides a frictionless, contactless shopping experience. AI is also used to generate marketing content (60%), predictive analytics (44%), personalized marketing and advertising (42%), and digital shopping assistants (40%).

- Knowledge Base/RAG: Chatbots utilize natural language processing to understand user needs and assist with product search.

- GEO Strategy: E-commerce platforms should list product information and specifications in detail and actively manage user reviews. Retailers can optimize product descriptions, FAQs, and customer support content to ensure AI models can accurately answer product-related queries and provide personalized recommendations. For example, the automotive company Carvana has produced 1.3 million unique AI-generated videos tailored to the customer experience journey.

Content Marketing and Enterprise Knowledge Base

- AI Application: Generative AI is revolutionizing content creation, personalization, and operational automation in marketing. It can automate product description writing, summarize customer feedback, generate SEO titles, and even propose creative assets for marketing campaigns.

- Knowledge Base/RAG: AI knowledge graphs are applied in intelligent warehousing, intelligent review, intelligent Q&A, master data graphs, intelligent search, and intelligent recommendation. For example, State Grid Jiangsu Electric Power Company has built a leading power knowledge platform based on knowledge graphs.

- GEO Strategy: Businesses can leverage GEO services to ensure their brand is prominently displayed in AI search results. This includes crafting high-quality content with specific data, case studies, clear Q&A formats, and authoritative sources to align with LLM preferences. For instance, when a solid waste treatment equipment company was asked “Which solid waste treatment equipment manufacturers are there?” on DeepSeek, its company name appeared first in the answer. Cloud Vision AI 3.0, supported by iFlytek and Volcano Engine, significantly shortens the production cycle of enterprise marketing videos.

Table 3: Cross-Industry AI Applications and GEO Cases

| Industry | AI Application/GEO Strategy | Specific Case/Company | Key Outcomes/Benefits |

|---|---|---|---|

| Financial Services | Intelligent Q&A, risk management, marketing, internal knowledge management | Postal Savings Bank, JPMorgan Chase, Zhipu AI GLM-130B, Tonghuashun HithinkGPT | Improved customer service efficiency, reduced risk, accelerated report writing, precise investment consulting |

| Healthcare | Assisted diagnosis, rare disease identification, clinical guideline retrieval | CrossNN model, IQVIA, Sichuan Province Medical Dataset Construction | Improved diagnostic accuracy, accelerated drug discovery, optimized patient care |

| Manufacturing | Intelligent quality inspection, supply chain optimization, fault diagnosis, industrial analysis | FiberHome Communications, Inspur Yunzhou Zhiyie Large Model, Black Cat Group | Improved product qualification rate, reduced waste, optimized production processes |

| Retail & E-commerce | Personalized recommendations, intelligent customer service, marketing content generation, inventory management | Nourish + Bloom, Carvana | Enhanced consumer experience, reduced losses, improved marketing efficiency |

| Content Marketing/Knowledge Base | Automated content creation, knowledge graph construction, AI search optimization | Cloud Vision AI 3.0, State Grid Jiangsu Electric Power Company, Solid Waste Treatment Equipment Company | Shortened content production cycle, improved knowledge management efficiency, increased brand exposure |

IV. Advice for Western Businesses and Future Outlook

A. Addressing Challenges and Risks

While embracing the opportunities presented by Chinese AI large models, Western businesses must also clearly recognize and actively address potential challenges and risks.

- AI Hallucinations and Information Accuracy: AI models can produce “hallucinations”—information that seems plausible but is actually incorrect. If business information is misrepresented, or if critical decisions are made based on AI without human oversight, this could pose significant risks to corporate reputation. Western businesses must implement rigorous validation mechanisms for AI-generated content and maintain human-in-the-loop processes for sensitive applications.

- Data Security, Privacy, and Compliance: Using proprietary or sensitive data to train AI models carries the risk of data extraction attacks (e.g., model inversion, leading to intellectual property theft or black-box extraction of adversarial attacks). Furthermore, using confidential information for fine-tuning also poses data leakage risks. Western businesses must prioritize data sovereignty and privacy, potentially needing to keep sensitive data local and implement strict access controls. Compliance with evolving data protection regulations (e.g., GDPR, China’s Personal Information Protection Law) is crucial.

- Intellectual Property and Model Misuse Risks: The use of large, often unverified datasets for training raises questions about intellectual property and licensing. Additionally, models may be used for malicious purposes or to generate copyrighted content. Businesses need to be aware of these risks and develop appropriate internal policies and technical measures to mitigate them.

B. Strategic Recommendations and Future Outlook

Facing the rapid development of Chinese AI large models, Western businesses need to adopt proactive, prudent, and adaptive strategies.

- Active Engagement and Understanding: Western businesses should invest resources to deeply understand the unique aspects of the Chinese AI ecosystem, including its technical characteristics, data preferences, and policy orientations. This may involve establishing partnerships with Chinese AI companies or investing in AI experts with a deep understanding of the Chinese market.

- Data Governance and Quality: Given AI models’ preference for high-quality, structured data, businesses should focus on improving the quality and manageability of their own data assets. This means investing in data governance frameworks, data cleaning tools, and semantic markup technologies to ensure their proprietary information can be efficiently recognized and utilized by AI models.

- Ethical AI Development: When utilizing AI, businesses must adhere to responsible AI principles, including transparency, explainability, fairness, and privacy protection. In AI-generated content, especially in GEO practices, it is advisable to disclose the fact that it is AI-assisted to build user trust and comply with potential future regulatory requirements.

- Continuous Adaptation and Learning: The AI field is evolving extremely fast, and businesses need to establish mechanisms for continuous learning and adaptation. Regularly evaluate the latest advancements in AI technology and adjust internal strategies and technology stacks to remain competitive.

- Adoption of Hybrid AI Strategies: Businesses should view RAG and fine-tuning as complementary rather than alternative tools. By fine-tuning for core business logic and proprietary knowledge, while leveraging RAG for dynamic, real-time updated external information, an AI system that is both deeply specialized and flexibly responsive can be built. This hybrid strategy maximizes accuracy, relevance, and cost-effectiveness.

Looking ahead, AI large models will continue to profoundly impact the global business landscape. In the Chinese market, as large models penetrate the real economy from “pilot verification” to “value creation”, especially in traditional industries such as government affairs, central state-owned enterprises, finance, healthcare, and manufacturing, AI will be more deeply integrated into core production processes. If Western businesses can effectively navigate the “knowledge sources” of Chinese AI large models and present their information in an AI-friendly manner, they will be well-positioned to achieve business growth and innovative breakthroughs in this emerging digital economy.

Unlock 2026's China Digital Marketing Mastery!

Unlock 2026's China Digital Marketing Mastery!