How SEO’s Past Foretells GEO’s Future: A Strategic Mandate for Your Business

- On November 7, 2025


Executive Summary: The 2026 Digital Divide

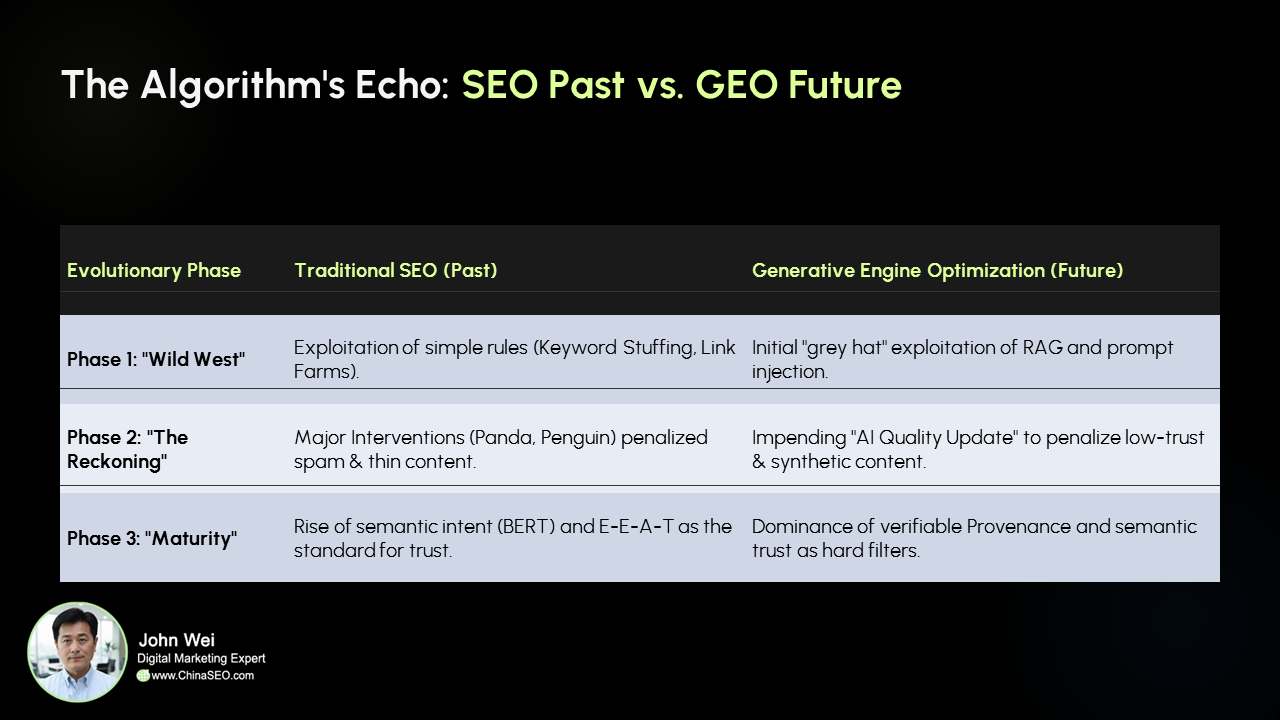

The history of traditional Search Engine Optimization (SEO), from its early “wild growth” exploiting simple rules to its disciplined evolution governed by principles of trust and authority, provides an undeniable blueprint for understanding the future trajectory of Generative Engine Optimization (GEO). Generative Engines (GEs), such as Google’s SGE or LLM-based chatbots, are reshaping the digital discovery paradigm, shifting the competitive focus from the traditional “Page Rank” to the emerging “Answer Rank”.

The core thesis of this report is that GEO is currently in a chaotic initial phase, similar to SEO over a decade ago, rife with grey or black hat strategies exploiting new technology vulnerabilities. However, this period of disorder will be short-lived. Historical precedent indicates that major algorithmic intervention is unavoidable and will force the market toward maturity.

For business leaders, the strategic mandate is urgent. Analysts predict that the critical window for establishing a foundational presence in generative answers will close by mid-2026. Businesses that fail to move from traditional keyword optimization to a GEO approach based on Retrieval-Augmented Generation (RAG) and semantic trust during this period face a significant digital divide, losing structural competitive advantage in the AI-driven search ecosystem.

Part I: The Foundation of Digital Trust: SEO’s Journey to E-E-A-T

The evolution of SEO is not just a technical story, but a narrative about the gradual establishment of digital trust and quality standards. This trajectory offers a crucial reference frame for predicting GEO’s future.

I.A. The Era of Unconstrained Manipulation (Pre-2011)

Before Google’s massive algorithm adjustments, the SEO field resembled a “Wild West,” characterized by high-volume, low-quality strategies exploiting simple algorithm loopholes. Competition was based on mechanical input quantity, often disregarding user intent and content quality.

Typical tactics included Keyword Stuffing, buying Link Directories, and establishing Content Farms—all designed to manipulate rankings rather than serve users. In the absence of major intervention, search results were frequently dominated by low-value, information-poor content, severely damaging the user experience. This neglect of user needs ultimately led to the search engines’ “crisis of trust” and catalyzed subsequent algorithm adjustments.

I.B. The Algorithmic Reckoning: Standardization and Maturity

Two major algorithm updates between 2011 and 2012 marked a decisive turning point for the SEO industry, enforcing a compliance shift from black hat to white hat strategies.

1. The Panda Update (February 2011): Mass-Targeting Quality Issues

Panda was a large-scale algorithm update directly aimed at lowering the rank of “thin sites,” content farms, and duplicate content. This update profoundly impacted SEO, forcing the industry to recognize that content originality and depth were the cornerstones of long-term visibility. Businesses had to transition from mere publishers seeking content volume to professional producers focused on high-quality output.

2. The Penguin Update (April 2012): Combating Manipulative Links and Spam

Following Panda, the Penguin update targeted webspam strategies, specifically punishing keyword stuffing and link schemes engineered to manipulate search rankings. It sent a clear signal: attempts to artificially inflate domain authority through deceptive means would be severely penalized. These two updates jointly formed the “regulatory hammer,” establishing that long-term digital visibility must adhere to evolving quality standards.

I.C. Quality Principles: The Rise of E-E-A-T and Context

After penalizing low-quality content and manipulation, algorithms began to evolve toward deeper semantic understanding. Subsequent updates, such as Hummingbird and BERT, signaled a major technical shift toward Natural Language Processing (NLP). BERT, which stands for Bidirectional Encoder Representations from Transformers, was designed to help Google better understand the nuances and context of search queries, rather than just matching exact keywords.

BERT’s introduction was more than a ranking tweak; it was a technical foundation for purely semantic systems. This shifted the measure of SEO success from, “Did I include the keyword X times?” to, “Did I fully answer the user’s underlying question?” This prepared the ground perfectly for the future GEO paradigm.

Consequently, the contemporary SEO standard—E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness)—became the non-negotiable benchmark, especially for high-risk (“Your Money or Your Life” – YMYL) content. E-E-A-T demands that websites not only have technical health (like HTTPS security, good mobile experience) but also build a verifiable digital reputation through reliable sources and qualified authors. SEO matured into a rigorous discipline focused on establishing and maintaining digital trust.

SEO history clearly demonstrates a predictable cycle of algorithm recurrence: New vulnerability discovered $\rightarrow$ Black hat mass exploitation $\rightarrow$ Major search engine intervention $\rightarrow$ Industry forced maturity and quality standards. GEO is currently in Phase Two (mass exploitation), signaling that a massive AI quality update is inevitable. Businesses must act proactively, adopting the E-E-A-T mindset of SEO’s maturity phase for their GEO strategy before the AI quality update brings penalties.

Part II: Generative Engine Optimization (GEO): The New Algorithm Frontier

Generative Engine Optimization (GEO) represents a profound paradigm shift in digital marketing. It is no longer about pushing a website to the top of the search results page, but about establishing brand content as an authoritative source for AI-generated answers.

II.A. Defining the Generative Shift and Answer Rank

GEO is defined as the practice of adapting digital content and online presence management to improve visibility in results produced by Generative Artificial Intelligence.

1. The Focus Shift: From Rank to Answer

The output focus of traditional SEO was achieving a higher position in search results. The measure of GEO success, however, is “Answer Rank”: ensuring the enterprise content is selected by the Generative Engine as the optimal source for its conversational, step-by-step response. This is a major change requiring content to be adaptive, multimodal (including images, video, and interactive media), and prioritized for context, clarity, and conversational tone over keyword density.

II.B. The Technical Backbone: Retrieval-Augmented Generation (RAG)

To understand how to optimize for GEO, businesses must first grasp the technical backbone driving generative search: Retrieval-Augmented Generation (RAG).

RAG is the key technique that LLM-based systems (like Google SGE or Anthropic Claude) use to retrieve semantically relevant content from external sources and base their answers on it, significantly reducing AI “hallucinations” and improving factual consistency. A RAG system first retrieves content via similarity search, then uses that material to generate a grounded, factual, and personalized response.
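The retrieve-then-generate flow described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular generative engine works: the bag-of-words `embed` function stands in for a real embedding model, and `retrieve`, `build_prompt`, and the sample `CHUNKS` are hypothetical names and data introduced only for this example.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query (the retrieval step)."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(query: str, chunks: list[str]) -> str:
    """Assemble the grounded prompt that a generator model would receive."""
    context = "\n".join(f"- {c}" for c in retrieve(query, chunks))
    return f"Answer using only these sources:\n{context}\n\nQuestion: {query}"


# Hypothetical content chunks from a retailer's site.
CHUNKS = [
    "Running shoes for beginners should have extra cushioning and a stable heel.",
    "Our store opens at 9 am on weekdays.",
    "Trail running shoes use deeper lugs to grip loose ground.",
]

print(build_prompt("What are the best running shoes for beginners?", CHUNKS))
```

Only the chunks that score well against the question reach the prompt, which is why content that answers a question directly and in its own words tends to be the content that gets used.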

The Technical Complexity of the RAG Optimization Blueprint

RAG optimization is far more complex than simple page SEO, involving a multi-stage process focused on the quality of the retrieval component: Text Segmentation, Embedding Model Fine-tuning, and Reranker Model Fine-tuning. This means GEO success hinges on a deep understanding of the underlying mechanics of how content is chunked and retrieved within the LLM’s knowledge base.

II.C. New Optimization Levers (White Hat GEO Strategy)

Successful white hat GEO strategies require content producers to embrace structured data and semantic intent, providing clean and efficient input for RAG systems.

1. Optimizing for Conversational Intent

Content must anticipate and fully satisfy user intent. This means using long-tail, question-based keywords (e.g., rather than searching “best running shoes,” searching “What are the best running shoes for beginners?”), and writing in a natural, conversational tone. By answering related questions within the content, businesses preemptively satisfy user needs, increasing the chance of being selected by the AI as the source.

2. Structured Data as AI Fuel

Schema Markup and structured formats (like headings, lists, tables) are crucial for GEO. Schema Markup (especially JSON-LD) acts as a clean, parseable data stream fed directly to the RAG system. Structured data ensures the AI can quickly extract accurate facts and definitions from a page and use them for “grounding” citations in its generated answer.
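As a rough illustration of what such markup looks like, the sketch below builds a schema.org `FAQPage` object in JSON-LD from question/answer pairs. The `faq_jsonld` helper is a hypothetical name introduced here; the `FAQPage`, `Question`, and `Answer` types come from the schema.org vocabulary. Whether a given generative engine actually consumes this markup is an assumption, not something the example demonstrates.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Serialize question/answer pairs as schema.org FAQPage JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


markup = faq_jsonld([
    (
        "What is Generative Engine Optimization (GEO)?",
        "The practice of adapting digital content to improve visibility "
        "in results produced by generative AI.",
    ),
])

# The JSON-LD is embedded in the page inside a script tag of this type.
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

Generating the markup from the same data that renders the visible page keeps the structured facts and the on-page text from drifting apart.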

3. The Technical Necessity of Content Chunking

RAG systems work by breaking documents into smaller semantic units, called “chunks”. For content creators, optimizing chunking is key to ensuring only coherent and relevant snippets of information are retrieved.

Content teams should adopt either a structure-based strategy (document-based chunking, e.g., leveraging Markdown or table structure) or a semantics-based one (agentic chunking, which lets the LLM determine splits based on paragraph type or instruction). Lack of control over content granularity can lead RAG systems to include undesirable or misleading information. Optimization must therefore ensure that each chunk is a coherent semantic unit, so that fragments taken out of context do not inject misinformation into the LLM’s answer.
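A structure-based approach can be sketched as follows, assuming the source content is Markdown with `#` headings. The `chunk_by_headings` function and the 500-character default are illustrative choices made for this example, not a standard; real pipelines typically measure size in tokens and add overlap between chunks.

```python
import re


def chunk_by_headings(markdown: str, max_chars: int = 500) -> list[dict]:
    """Split a Markdown document into chunks, one or more per heading section.

    Sections longer than max_chars are split on paragraph breaks. Every chunk
    keeps its heading so it still makes sense when retrieved in isolation.
    A single paragraph longer than max_chars is kept whole rather than cut.
    """
    chunks: list[dict] = []
    heading = ""
    body: list[str] = []

    def flush() -> None:
        """Emit the chunks for the section collected so far."""
        text = "\n".join(body).strip()
        if not text:
            return
        piece = ""
        for para in re.split(r"\n\s*\n", text):
            if piece and len(piece) + len(para) + 2 > max_chars:
                chunks.append({"heading": heading, "text": piece})
                piece = ""
            piece = f"{piece}\n\n{para}" if piece else para
        chunks.append({"heading": heading, "text": piece})

    for line in markdown.splitlines():
        match = re.match(r"^#{1,6}\s+(.*)", line)
        if match:
            flush()  # close the previous section before starting a new one
            heading, body = match.group(1).strip(), []
        else:
            body.append(line)
    flush()
    return chunks


# Hypothetical page content.
DOC = """# Returns
Items can be returned within 30 days.

Refunds are issued to the original payment method.

# Shipping
Orders ship within 2 business days.
"""

for chunk in chunk_by_headings(DOC):
    print(chunk["heading"], "->", repr(chunk["text"]))
```

Splitting only at headings and paragraph breaks means a chunk never ends mid-sentence, which is the property the text above asks for: each retrieved snippet should be a coherent unit.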

Extrapolating from E-E-A-T’s role in SEO history, its status in RAG systems has moved from a soft signal to a hard gating factor. AI systems are designed to source answers from “trustworthy sources”. If content lacks sufficient E-E-A-T signals (e.g., no credentialed authors or rigorous citations), RAG’s initial retrieval mechanism may filter it out directly, preventing it from being used to generate the answer, regardless of surface optimization. Therefore, businesses must invest in verifiable domain authority and author credentials.

Furthermore, because RAG systems rely on similarity search to retrieve relevant content, the foundation of semantic search—vector embeddings and vector databases—becomes a necessary component of the GEO strategy. Budgeting for vector infrastructure (including data quality preprocessing and advanced indexing) is no longer a technical upgrade but a prerequisite for scalable GEO. Vector databases enable real-time insights, optimize data storage and retrieval, and thus support hyper-personalized marketing campaigns and real-time content adjustment, making ad spend more efficient.

To illustrate this strategic pivot, here is a comparison of SEO and GEO focus across key dimensions:

Strategic Investment Comparison: SEO vs. GEO Focus

| Optimization Dimension | Traditional SEO Priority | Generative Engine Optimization (GEO) Priority | Business Implication |
| --- | --- | --- | --- |
| Goal | Higher position in SERP (Page Rank). | Becoming the authoritative generative answer (Answer Rank). | Requires KPI shift from clicks/rankings to visibility and conversion attribution. |
| Content Format | Text-based pages, high keyword density. | Structured data, conversational language, multimodal assets (video/images). | Mandates technical investment in Schema and multimedia optimization. |
| Data Backbone | Relational databases, keyword metrics. | Vector databases, semantic relevance, real-time user behavior analysis. | Prerequisite for hyper-personalized marketing and RAG-friendly content delivery. |
| Defense Strategy | Toxic link audit. | Neural Reputation Risk Monitoring and content source verification. | Urgent need to audit external content that influences LLM training data. |

Part III: The Impending Crisis: Content Poisoning and GEO Grey Strategies

SEO history teaches us that manipulation attempts scale rapidly before a new algorithm environment stabilizes. With the proliferation of LLMs, the threats facing GEO far exceed traditional SEO spam, centering on Massive Content Contamination and Neural Reputation Risk.

III.A. The Evolution of SEO Poisoning and Automated Malice

Traditional SEO poisoning is a cyberattack technique aimed at manipulating search rankings to place malicious websites prominently in search results. Generative AI is fundamentally changing the scale and nature of this attack. Attackers are already using LLMs to create vast, interconnected networks of malicious sites, each with unique, high-quality content that is “virtually indistinguishable from legitimate sources”.

The scalability risk posed by this technique is unprecedented. A single threat actor could theoretically monitor trending searches in real-time, auto-generate relevant malicious content, and optimize its rank, thereby poisoning search results for thousands of keywords simultaneously without human intervention.

III.B. Neural Reputation Risk and Content Contamination

This is one of the most significant and subtle risks within the GEO landscape. Large Language Models (LLMs) are often fine-tuned or retrained on vast amounts of data scraped from public platforms (such as X/Twitter, Instagram, TikTok) and forums (like Reddit). These data sources are often filled with engagement-driven, emotionally charged, or synthetically generated content.

1. AI Model’s “Cognitive Decline”

When LLMs ingest this contaminated data, they suffer “cognitive decline”—meaning they lose reasoning capability, contextual understanding, and factual consistency over time. This phenomenon is termed “Neural Reputation Risk” and describes the gradual decline in the trustworthiness and performance of an AI model due to ingesting misleading or manipulative data.

2. Direct Impact on Brand Reputation

Content contamination directly affects corporate reputation. If LLMs extract commentary from platforms like Reddit, which can skew extreme, it creates selection bias, leading the AI system to misrepresent the brand or its products. Over-reliance on AI or unreliable AI-generated answers can dilute brand essence and cause “chatbot fatigue,” damaging customer trust, reliability, and professionalism.

Under this new threat, the primary risk shifts from the traditional “losing rank” to “losing trust,” as the AI system may distort a brand’s expertise or image. As Warren Buffett stated: “It takes 20 years to build a reputation and five minutes to ruin it.” Businesses must pivot from passive content auditing (checking only their own sites) to proactive Neural Reputation Monitoring, tracking brand discussion and citation on external platforms that feed LLM training data.

III.C. Global Digital Interference and Regulatory Reaction

The risk of AI-driven manipulation is globally recognized. Multilingual studies confirm that generative tools increase the structural threat level of digital interference, making it harder to distinguish genuine from synthetic content. For example, VIGINUM, France’s digital interference monitoring service, tracks and analyzes foreign digital interference operations, particularly those using generative AI for the mass dissemination of false or misleading information.

Global regulators, such as the European Data Protection Board (EDPB), are actively addressing AI deception and misuse, requiring policies on transparency, data source integrity, and AI deception detection. AI developers themselves (such as OpenAI and Anthropic) enforce strict moderation policies prohibiting the use of their models for deception, harassment, and illegal activities. This means companies face direct legal and reputational risks if their GEO practices lack proper policies and safeguards.

III.D. Technical Exploitation of RAG Systems

High-level GEO black hat strategies will target the core mechanism of RAG systems. The inherent weakness of RAG systems lies in the selection of content “chunks”. Attackers may optimize short, misleading “poisoning chunks” (e.g., under 500 characters) to pass semantic similarity thresholds. This allows non-factual data to be injected directly into the LLM-generated answer, achieving precise manipulation even if the overall document structure is sound.

Furthermore, black hat strategists will focus on manipulating Reranker Models (the final filtering layer after retrieval) rather than surface-level optimizations like meta tags, as was common in traditional SEO poisoning.

Content contamination forms a causal loop: Generative AI is used to mass-produce seemingly unique content $\rightarrow$ this content spreads rapidly on social media and web platforms $\rightarrow$ LLMs scrape and ingest this engagement-optimized content for training $\rightarrow$ LLM factual accuracy drops, leading to “cognitive decline” $\rightarrow$ the model generates more low-quality content, and the cycle restarts. Therefore, compliance teams must collaborate with marketing teams to set clear AI content disclosure and quality standards to manage reputational risks and comply with emerging legal requirements.

Part IV: Convergence: GEO’s Future and Strategic Roadmap

GEO’s future is not the replacement of SEO, but the fusion of the two into a more comprehensive and complex digital visibility discipline, a unified “Search Optimization” (A/SEO) practice.

IV.A. Fusion, Not Substitution: The End of SEO vs. GEO

Just as social media did not replace search engines, GEO will integrate into the existing framework of digital marketing. Traditional SEO practices remain the foundation: technical health, speed, mobile experience, and basic keyword intent analysis are prerequisites for AI content scraping and data trust. While experts may rebrand themselves as GEO specialists if LLMs become the primary source of discovery, the core skill—adapting to changing algorithmic demands—will remain constant.

IV.B. 2024–2026 Strategic Investment Mandate

Based on the understanding of the algorithm recurrence cycle, businesses must act immediately. The window for establishing an AI-generated answer foundation is rapidly closing; analysts expect market dominance to solidify by mid-2026 among brands that implemented comprehensive GEO strategies during the 2024-2025 period.

This urgency is based on the fact that early investment ensures content is correctly embedded in the RAG vector space and established as a high-authority source, forming a long-term competitive moat. Executives must view GEO investment as a competition for structural cognitive space within AI models.

1. Vector Infrastructure Investment

As noted earlier, prioritizing the shift to vector databases is necessary to access real-time semantic insights and implement advanced RAG systems. Vector databases enable semantic retrieval, content chunk optimization, and support real-time content adjustment—key technical requirements for scalable, personalized GEO strategies.

IV.C. Scaling Local Authority Through Programmatic Content (The PPP Model)

As GEO increases the demand for highly relevant, context-rich content, the Programmatic, Parameterized, or Page-Per-Place (PPP) content strategy becomes critical for scaling local authority.

1. The High-Intent Conversion Opportunity

Searches targeting local intent (e.g., “car accident lawyer Seattle”) often have the highest conversion rates. GEO-Targeted PPP articles are designed to efficiently capture this high-value traffic.

2. How PPP Articles Work

GEO-Targeted PPP articles are structured content tailored to a specific geographic location (city, neighborhood) and a specific service/product. For example, a law firm might publish “Truck Accident Attorney in Tacoma” alongside similar, structurally consistent but content-customized articles for Seattle, Olympia, and Spokane.

This approach allows content production to scale efficiently while avoiding the thin or duplicate content issues traditionally penalized by the Panda update. By customizing localized content (mentioning landmarks, community references), these articles feel more authentic to readers and send stronger local signals to the generative engine. They provide the precise, context-rich content snippets required for RAG systems to generate helpful, geographically relevant answers. The PPP strategy successfully merges SEO’s historical focus on high-intent conversion with GEO’s need for scalable, structured semantic relevance.
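The mechanics of a PPP workflow can be sketched as a shared template filled with per-place data, followed by a check that the resulting pages are not near-duplicates of each other. Everything here is hypothetical: the template, the place data, the `render_pages` and `too_similar` helpers, and the 0.8 overlap threshold are illustrative assumptions. The word-overlap (Jaccard) measure is a simple stand-in and says nothing about how any search or generative engine actually detects duplicate content.

```python
import re
from itertools import combinations

# Hypothetical template and local data; a real page would carry far more unique detail.
TEMPLATE = "{service} in {city}\n\nOur {city} office is {landmark}. {local_note}"

PLACES = [
    {
        "city": "Tacoma",
        "landmark": "two blocks from the Tacoma Dome",
        "local_note": "We regularly handle collisions on the I-5 corridor near the port.",
    },
    {
        "city": "Seattle",
        "landmark": "a short walk from Pioneer Square",
        "local_note": "Many of our cases involve freight traffic around the downtown waterfront.",
    },
]


def render_pages(service: str, places: list[dict]) -> dict[str, str]:
    """Render one page per place from the shared template."""
    return {p["city"]: TEMPLATE.format(service=service, **p) for p in places}


def jaccard(a: str, b: str) -> float:
    """Word-set overlap between two texts (1.0 means identical vocabularies)."""
    wa, wb = (set(re.findall(r"[a-z0-9]+", t.lower())) for t in (a, b))
    return len(wa & wb) / len(wa | wb) if wa | wb else 0.0


def too_similar(pages: dict[str, str], threshold: float = 0.8) -> list[tuple[str, str]]:
    """Return pairs of pages whose word overlap exceeds the threshold."""
    return [
        (x, y)
        for x, y in combinations(pages, 2)
        if jaccard(pages[x], pages[y]) > threshold
    ]


pages = render_pages("Truck Accident Attorney", PLACES)
print(pages["Tacoma"])
print("Pairs needing more local detail:", too_similar(pages))
```

Running a check like this before publishing gives an early warning when the localized fields are too thin to distinguish one city page from the next, which is the failure mode the Panda comparison above warns about.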

IV.D. The Necessity of Human Oversight

While AI tools can accelerate the content workflow and generate drafts, relying solely on raw AI output is insufficient. Pure AI-written blogs often lack brand voice, nuance, and human originality, making it difficult to achieve a high E-E-A-T score. This can lead to brand dilution and “chatbot fatigue”.

Therefore, businesses must adopt a “Human-in-the-Loop” model: AI should be used for brainstorming and drafting, but the final content must undergo rigorous human editing to add unique insights, maintain authenticity, and ensure the highest levels of expertise and trustworthiness.

The table below summarizes key SEO historical updates and their corresponding predicted challenges and mitigation strategies in the GEO future:

Key SEO Algorithm Updates and their GEO Counterparts

| SEO Algorithm Update (Past) | Year | Focus / Core Penalty | GEO Counterpart (Future Prediction) | Focus / Core Mitigation |
| --- | --- | --- | --- | --- |
| Panda | 2011 | Penalized thin, low-quality, duplicate content (content farms). | AI Quality Update (Q-RAG Audit) | Penalizes generic AI-generated text lacking E-E-A-T and originality. |
| Penguin | 2012 | Targeted manipulative link schemes and keyword stuffing (webspam). | Neural Reputation Risk Filter | Detects and ignores manipulative social/forum signals used for RAG sourcing. |
| Vince/Brand Trust | 2009 | Favored established brands with reputation signals. | Provenance Verification | Prioritizes content from domains demonstrating verifiable E-E-A-T across the knowledge graph. |
| Hummingbird/BERT | 2013/2019 | Improved context understanding and conversational intent (NLP). | Vector Database Alignment | Prioritizes content optimized (chunked) for semantic retrieval and conversational clarity. |

Conclusion and Prescriptive Recommendations

The recurrence of algorithms is a given in digital marketing. SEO history provides a clear blueprint for GEO: the current phase of exploration and exploitation will inevitably lead to a massive quality reckoning. For businesses, now is the critical moment to shift digital marketing from passive rank-chasing to proactive, structural AI advantage building.

A. Strategic Summary: The Competition for Structural Advantage

Building GEO authority is not an operational expenditure like PPC; it is a structural asset. Early optimization ensures content is correctly embedded in the RAG system’s vector space and established as an authoritative source, creating a high barrier to entry for competitors. This investment is the only path to securing long-term competitive advantage and avoiding being left behind in the 2026 digital divide.

B. Prescriptive Recommendations for Your Business

To navigate the coming GEO shift, CMOs and digital strategy leaders must immediately adopt these five strategic steps:

- Audit Technical Health Immediately (Lay the Foundation): Ensure fundamental SEO hygiene (E-E-A-T, Structured Data, Technical SEO) is impeccable, as these remain the non-negotiable entry points for RAG content retrieval.

- Establish Vector Data Infrastructure (Technical Upgrade): Prioritize investment in vector databases to enable deep semantic search, content chunk optimization, and support the real-time content adjustment required by LLMs.

- Implement Neural Reputation Risk Monitoring (Defense Mechanism): Actively monitor and counter misinformation or content manipulation targeting brand reputation, particularly on social platforms and forums that serve as key data sources for LLMs.

- Scale Local Presence via PPP (Growth Strategy): Adopt the GEO-Targeted PPP strategy to efficiently capture high-intent local traffic, translating AI’s conversational search focus into measurable conversions.

- Mandate Human Oversight (Maintain Trust): Use AI as an accelerator, not an author. All content must undergo rigorous human review to inject unique insights, maintain authenticity, and ensure the E-E-A-T signals that raw AI output lacks.

10 Essential FAQs on the Future of Generative Engine Optimization (GEO)

1. What is Generative Engine Optimization (GEO), and how does it fundamentally differ from traditional SEO?

Answer: GEO is the practice of adapting your digital content and online presence to improve visibility in results produced by Generative AI. The key difference is the objective: SEO targets Page Rank (achieving a high position in traditional blue links), while GEO targets Answer Rank (ensuring your content is selected as the authoritative source for the AI’s conversational response).

2. Why do you argue that GEO will inevitably repeat SEO’s history, moving toward regulation?

Answer: SEO history shows a predictable cycle: new vulnerability $\rightarrow$ black hat exploitation $\rightarrow$ forced algorithmic intervention. GEO is currently in the exploitation phase, with “content poisoning” tactics. However, AI platforms’ credibility is tied directly to the trustworthiness of their answers. Major algorithmic interventions, similar to the Panda and Penguin updates, are unavoidable to force market maturity and preserve user trust in the AI.

3. What is the “2026 Digital Divide,” and why is the strategic time window closing?

Answer: The “2026 Digital Divide” refers to the deadline (projected by analysts as mid-2026) by which brands that implemented comprehensive GEO strategies will have solidified their dominant positions in generative answers. Failure to secure this foundational presence by that time means losing a structural competitive advantage in the AI-driven search ecosystem, making it exponentially harder for late adopters to catch up.

4. Why is the RAG (Retrieval-Augmented Generation) system the new technical focus for GEO?

Answer: RAG is the core technology modern LLMs use to retrieve semantically relevant facts from external sources before generating an answer, which significantly reduces AI “hallucinations”. GEO success hinges on optimizing RAG components like Content Chunking and Structured Data so that the AI can efficiently retrieve precise, accurate facts from your website to “ground” its response.

5. How has the E-E-A-T framework evolved from a soft signal to a “hard gating factor” in GEO?

Answer: In the SEO era, E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) was a critical signal; in the GEO era, it’s a hard gating factor. AI systems are designed to source answers only from “trustworthy sources.” If your content lacks strong E-E-A-T signals (e.g., verifiable author credentials, unique experience), the RAG system may filter it out before retrieval, preventing it from ever being used to generate an answer.

6. What is “Neural Reputation Risk,” and how does it shift the nature of brand defense?

Answer: Neural Reputation Risk is the gradual degradation of an AI model’s trustworthiness due to ingesting misleading or emotionally charged data, particularly from public social platforms like Reddit or X/Twitter. The risk shifts from “losing rank” to “losing trust,” as the AI system may misrepresent your brand based on this polluted external data. Companies must adopt proactive Neural Reputation Monitoring to track these data sources.

7. Will GEO replace traditional SEO, or are they two separate departments?

Answer: GEO will not replace SEO; they are rapidly converging into a single, unified “Search Optimization” practice. Traditional SEO (technical health, mobile experience, keyword intent) remains the necessary foundation that ensures AI crawlers can access your content. GEO builds the authoritative layer on top of this foundation to secure the “Answer Rank.”

8. Why is mandatory human oversight (“Human-in-the-Loop”) essential for GEO strategy?

Answer: Relying solely on raw AI output often results in content that lacks brand voice, nuance, and human originality, thus failing to achieve the high E-E-A-T scores required for GEO. The “Human-in-the-Loop” model uses AI for acceleration and drafting, but mandates rigorous human editing to inject unique insights and ensure verifiable experience, which are crucial for maintaining trust.

9. What specific technical infrastructure investment is now mandatory for scalable GEO success?

Answer: Investment in Vector Databases is a prerequisite for scalable GEO. Unlike traditional relational databases, vector databases enable semantic search, which RAG relies on for efficient content retrieval. This infrastructure supports advanced indexing, content chunk optimization, real-time content adjustment, and hyper-personalized marketing campaigns.

10. What is the GEO-Targeted PPP strategy, and why is it a recommended growth mechanism?

Answer: PPP (Programmatic, Parameterized, or Page-Per-Place) is a strategy for efficiently scaling local authority. It involves creating structured content pieces tailored to specific geographic locations and services (e.g., “Truck Accident Attorney in Tacoma” vs. “in Seattle”). This method efficiently captures high-intent local traffic while providing RAG systems with the precise, context-rich snippets needed for geographically relevant answers.
